Scenarios for applying artificial intelligence in medicine: why isn't it that simple?

There has been a lot of talk recently about machine learning developments in medicine: artificial intelligence (AI) analyses medical studies, makes diagnoses, develops new drugs, assists with surgery... So why are we still not seeing mass adoption of this promising technology in clinical practice?

In this article, we will discuss how AI could be applied in medicine, what the barriers to its widespread adoption are, and how (in our opinion) they can be overcome. We will use radiology as an example, because this is the area of medicine in which we (the Celsus team) develop our AI solutions.

Which AI are we talking about?

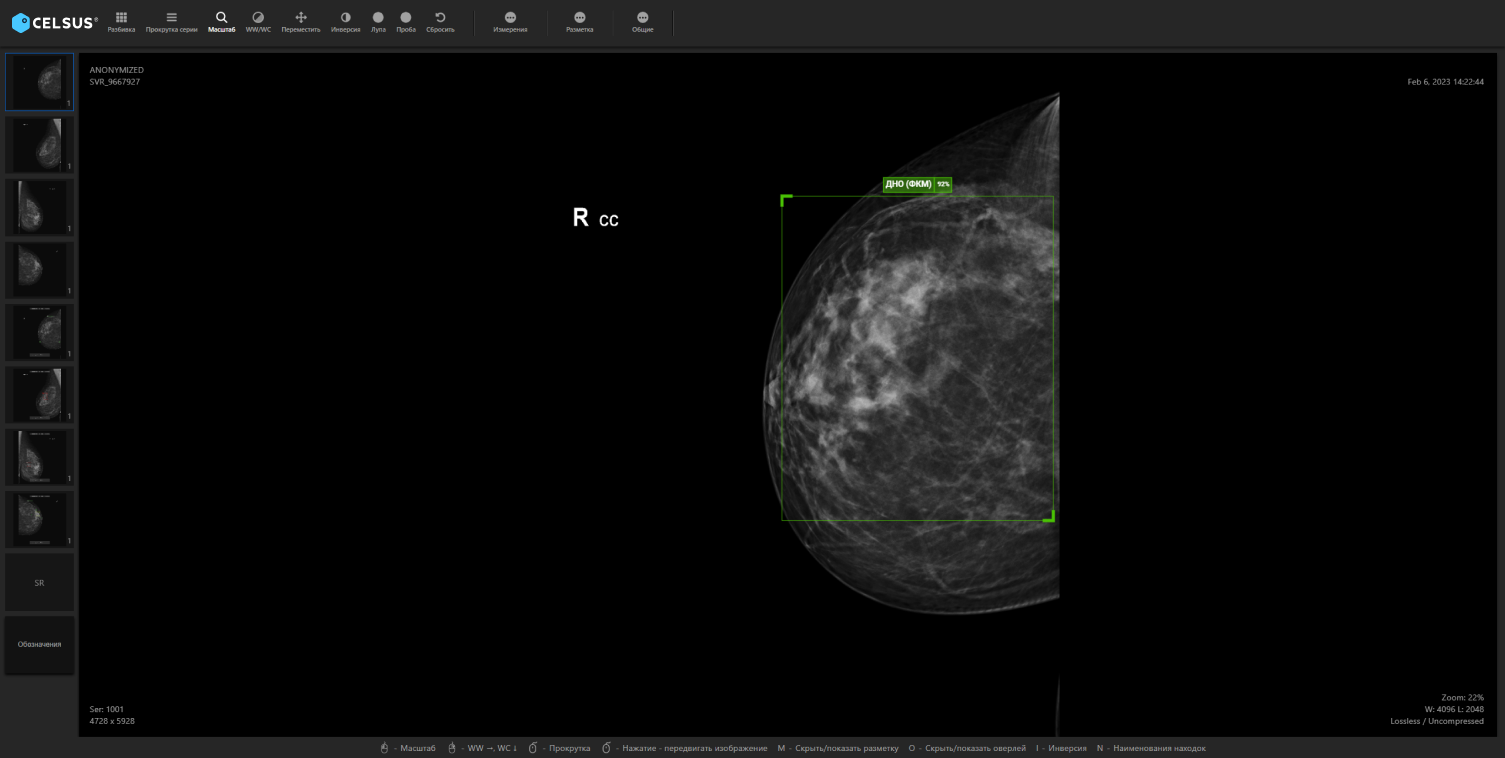

When people talk about AI in radiology, they usually mean computer vision technology. It helps doctors analyse medical studies (X-rays, CT scans, MRIs, etc.) by sorting the list of studies according to likely pathology, highlighting "suspicious" findings and automatically drafting a conclusion.

However, don't assume that applying AI affects only the doctor's work. Its business effects extend to the entire diagnostic process and touch everyone involved, from the healthcare provider to the patients. Moreover, each party expects a different benefit. The healthcare provider, for example, is interested in making work processes more efficient and reducing diagnostic costs. The doctor wants relief from routine work and insurance against errors. The patient wants a timely, accurate diagnosis, effective treatment and human attention.

All these goals are in harmony with one another. If the AI system can reduce the radiologist's workload, the doctor will spend less time and effort on the same amount of work. This will let them devote more time to patients or to more complex cases. The healthcare provider, in turn, benefits because the doctor's time is used more efficiently.

In the end, everyone wins: cancer is diagnosed earlier, the health system spends less money on treatment, and patients live happily ever after. Sounds promising, doesn't it? But what might this look like in reality?

Scenarios for applying AI in radiology can be grouped according to different classifications. Let's look at two of them.

Autonomy level

This classification is often used in the development of self-driving vehicles. The minimum (zero) level of autonomy is assigned when a person drives alone, without any assistance from AI. The maximum (fifth) level means that the AI is fully autonomous: the human is just a passenger and may not even watch the road.

This classification could easily be transposed to any machine learning application, including radiology. We suggest dropping level zero, where the radiologist does not use AI at all, and starting with the first level.

Level 1: assistance to the radiologist.

At this level of autonomy, decisions are still made by the doctor, but the doctor has new tools. AI can help with morphometry by automatically taking various measurements, for example, the volume of an organ or the size of a neoplasm. This is a fairly routine operation for the radiologist, and using AI can save the doctor time.
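To make the morphometry example concrete, here is a minimal sketch of how an organ volume could be derived once some segmentation model has marked the organ's voxels. It assumes a hypothetical 3D binary mask stored as nested lists and a voxel spacing in millimetres (in a real system this would come from the scan's metadata); the function name `organ_volume_ml` is illustrative and not part of any product.

```python
from __future__ import annotations

from typing import Sequence

# Hypothetical representation: a 3D mask as nested lists [slice][row][col],
# where 1 marks voxels that an (assumed) segmentation model assigned to the organ.
Mask3D = Sequence[Sequence[Sequence[int]]]


def organ_volume_ml(mask: Mask3D, spacing_mm: tuple[float, float, float]) -> float:
    """Return the segmented volume in millilitres.

    spacing_mm is the voxel size (slice spacing, row spacing, column spacing)
    in millimetres.
    """
    dz, dy, dx = spacing_mm
    voxel_volume_mm3 = dz * dy * dx
    # Count the voxels labelled as belonging to the organ.
    n_voxels = sum(v for slc in mask for row in slc for v in row)
    # 1 ml = 1 cm^3 = 1000 mm^3
    return n_voxels * voxel_volume_mm3 / 1000.0
```

For instance, a 10 × 10 × 10 block of marked voxels at 1 mm isotropic spacing gives 1000 mm³, i.e. 1.0 ml. The arithmetic is trivial; the hard part, and what the AI actually contributes, is producing an accurate mask.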

Level 2: partial autonomy.

This is the level at which most AI systems for radiology currently operate. Here, too, the physician makes the decisions, but the AI's help is no longer limited to routine tasks. It can detect abnormalities, highlight their location on an image and assess disease progression.

Level 3: conditional autonomy.

Here the AI makes decisions only in specified cases. For example, when there is a large flow of studies (as in screening), it can "screen out" the studies in which the system is highly confident that there is no pathology; the doctor does not even look at them. AI with this degree of autonomy can also be used in emergency medicine. For example, a person is admitted to hospital with an injury, and the AI system analyses his or her examination and concludes that the patient very likely has a life-threatening injury. The surgeon is notified and the patient is taken immediately to the operating theatre.
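The "specified cases" of conditional autonomy can be pictured as a simple routing rule on the model's output. The sketch below is illustrative only: it assumes the model returns a calibrated probability of pathology, and the class `Study`, the function `route` and both threshold values are hypothetical; real thresholds would have to be chosen and validated on the institution's own data.

```python
from dataclasses import dataclass


@dataclass
class Study:
    study_id: str
    p_pathology: float  # model's estimated probability of pathology (assumed calibrated)
    emergency: bool = False


# Hypothetical thresholds, for illustration only.
NORMAL_THRESHOLD = 0.02    # at or below this, a screening study is treated as normal
CRITICAL_THRESHOLD = 0.90  # at or above this, an emergency study triggers an alert


def route(study: Study) -> str:
    """Decide where a study goes under a conditional-autonomy policy."""
    if study.emergency and study.p_pathology >= CRITICAL_THRESHOLD:
        # Urgent path: notify the surgeon immediately.
        return "alert_surgeon"
    if not study.emergency and study.p_pathology <= NORMAL_THRESHOLD:
        # Screened out: no radiologist reads this study.
        return "auto_normal"
    # Everything the AI is not confident about is read by a doctor.
    return "radiologist"
```

Note the asymmetry in the design: the AI acts on its own only at the confident extremes, and an emergency study is never auto-cleared, so any uncertain case falls back to a human.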

Level 4: high autonomy.

In this scenario (rather fanciful for now), the AI makes the decisions in most cases and asks for a radiologist's opinion only in some special, complicated cases.

Level 5: full autonomy.

Here, all decisions are made by the AI itself, and the doctor is not involved in the process at all. This scenario belongs entirely to the realm of science fiction. Moreover, we doubt that this level of AI autonomy will ever be achieved. We will explain why a little later.

Degree of interaction with the doctor

Another classification describes how "noticeable" the introduction of an AI system is to the doctor.

Level 1: no interaction.

In this scenario, the AI remains almost invisible to the radiologist. For example, the doctor comes to work and opens the list of examinations to be analysed, and the AI system has sorted this list by priority rather than simply by date or at random. Examinations that show signs of pathology with a high probability come first, followed by those the AI considers "normal".
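The invisible prioritisation described above amounts to re-sorting the worklist by the AI's score. A minimal sketch, assuming each study carries a hypothetical score `p_pathology` in [0, 1]; breaking ties by arrival time is our own illustrative choice, not something prescribed by any particular system.

```python
from __future__ import annotations

from dataclasses import dataclass
from datetime import datetime


@dataclass
class WorklistItem:
    study_id: str
    received: datetime
    p_pathology: float  # hypothetical AI score in [0, 1]


def prioritise(worklist: list[WorklistItem]) -> list[WorklistItem]:
    """Return studies with the highest AI risk first; ties go oldest first."""
    return sorted(worklist, key=lambda item: (-item.p_pathology, item.received))
```

Nothing else about the doctor's workflow changes: every study is still read, only the order differs, which is why this level requires no interaction at all.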

Level 2: low- to medium-degree interaction.

Here the AI can act as a "second opinion", that is, analyse and describe the study in parallel with the doctor. If their opinions differ, the conflict can be resolved by, for example, involving another doctor. At this level, AI can also act as an "airbag": if the doctor has marked an examination as "normal" but the AI has found signs of abnormality, the doctor receives a notification from the system. This enables the doctor to revisit the examination, double-check his or her conclusion and, if necessary, change the decision.
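The "airbag" check can be sketched as a comparison between the doctor's label and the AI's score. This assumes hypothetical inputs: a mapping from study ID to the doctor's label ("normal" or "abnormal") and a mapping from study ID to an AI probability of abnormality, with an illustrative threshold of 0.5.

```python
from __future__ import annotations


def airbag_alerts(
    doctor_labels: dict[str, str],
    ai_scores: dict[str, float],
    threshold: float = 0.5,
) -> list[str]:
    """Return IDs of studies the doctor marked "normal" but the AI scored as abnormal."""
    return [
        study_id
        for study_id, label in doctor_labels.items()
        # Studies without an AI score are treated as not flagged.
        if label == "normal" and ai_scores.get(study_id, 0.0) >= threshold
    ]
```

Only one direction of disagreement triggers the airbag. If the doctor finds an abnormality that the AI missed, no alert is needed, because the doctor's conclusion already errs on the side of caution.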

Level 3: high-degree interaction.

In this scenario, the doctor immediately sees all of the AI system's results, including the location of abnormalities and the conclusion. He or she can use these results to speed up the analysis of the examination.

Implementation problems

So what prevents the above scenarios from being put into practice? There are many barriers, but here we describe only those that we, as developers of AI systems for radiology, consider the most significant.

The first problem is that there are still no established cost-effective scenarios for applying AI in radiology, or in medicine in general, and few studies comparing different scenarios have been conducted.

Yes, if you dig through the scientific literature, you can find various articles reporting the results of studies on AI effectiveness. But, firstly, there are still quite few of them, and secondly, they are often limited in methodology and scope. In addition, such studies are often based on retrospective rather than prospective data (i.e. on medical studies already analysed by doctors), and that data is not always properly verified. As a result, they tell us little about the real, final effect of introducing such tools. And what should that effect even be measured in: money, or lives saved?

Here a certain contradiction arises: long, high-quality trials on prospective data require the interest of medical institutions, but medical institutions are not interested in conducting them without a clear economic effect. All this is complicated by the high cost of such lengthy studies. Developers often cannot afford to pay for such research either: they are trying to survive in the still-emerging medical AI market, and developing such systems is in itself expensive, time-consuming and complicated.

The second big problem is that there are still no established best implementation practices anywhere in the world, and no accepted method for calculating the economic effect. That effect, in turn, depends on many factors: the implementation scenario, the AI quality metrics and the characteristics of the healthcare facility. The challenge is to strike a balance between the developer's profits, the financial interests of the medical organisation and the overall social and economic impact.

Another problem is the lack of a clear system for comparing AI services. The quality metrics claimed by developers, such as AUC, accuracy, sensitivity and specificity, do not guarantee that the system will be useful for a particular healthcare institution. Moreover, practical testing requires a lot of resources from the organisation: IT integration, creating a test dataset, staff training and so on. Not every institution can afford this.
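One reason headline metrics do not transfer between institutions can be shown with a little arithmetic. The sketch below computes the metrics named above from labelled test data and then applies Bayes' rule to get the positive predictive value (PPV), the share of AI-flagged studies that really contain pathology. All function names are illustrative, and the AUC is computed with the standard pairwise (Mann-Whitney) definition rather than any vendor's method.

```python
from __future__ import annotations


def confusion_counts(labels: list[int], scores: list[float], threshold: float):
    """Return (tp, fp, tn, fn) for binary labels (1 = pathology) at a score threshold."""
    tp = fp = tn = fn = 0
    for y, s in zip(labels, scores):
        predicted = s >= threshold
        if predicted and y:
            tp += 1
        elif predicted:
            fp += 1
        elif y:
            fn += 1
        else:
            tn += 1
    return tp, fp, tn, fn


def sens_spec_acc(labels: list[int], scores: list[float], threshold: float = 0.5):
    """Sensitivity, specificity and accuracy at the given threshold."""
    tp, fp, tn, fn = confusion_counts(labels, scores, threshold)
    return tp / (tp + fn), tn / (tn + fp), (tp + tn) / len(labels)


def auc(labels: list[int], scores: list[float]) -> float:
    """Probability that a random positive outscores a random negative (ties count 1/2)."""
    pos = [s for y, s in zip(labels, scores) if y]
    neg = [s for y, s in zip(labels, scores) if not y]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


def ppv(sensitivity: float, specificity: float, prevalence: float) -> float:
    """Bayes' rule: share of positive AI calls that are true pathology."""
    true_pos = sensitivity * prevalence
    false_pos = (1 - specificity) * (1 - prevalence)
    return true_pos / (true_pos + false_pos)
```

With a sensitivity and specificity of 90% each, `ppv(0.9, 0.9, 0.5)` is about 0.9, but `ppv(0.9, 0.9, 0.01)` is only about 0.083. In a screening population where 1% of studies contain pathology, roughly eleven out of twelve AI alerts would be false, even though the quoted metrics are unchanged. This is why an institution has to test a system on its own data and case mix.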

Finally, the last big problem is doctors' own negative attitude towards AI. The reasons range from the fear that AI will replace the doctor, to a reluctance to spend time implementing the technology, to a general lack of understanding of why it is needed. The dilemma for developers is that raw versions of AI systems can indeed scare doctors away, but without feedback from doctors and work on real data, it is impossible to turn a raw product into a useful, high-quality one.

Proposed solutions

We should say right away that this section is purely subjective. Here is what we think the main objectives are:

- searching for optimal AI application scenarios;

- making it simpler and cheaper to test and compare AI systems;

- overcoming doctors' negative attitudes.

There is only one way to get AI systems to the point where they are truly useful: developers, clinicians, healthcare providers and the healthcare system as a whole must work together. What is required of each of them?

- From the government and the healthcare system: expanding AI experiments. One such interesting and fairly large-scale experiment is already under way in Russia, but so far only in Moscow. In addition, grants should be allocated not only for developing AI products but also for full-scale controlled trials. Moreover, to make it easier for medical institutions to test competing services, a single platform should be created into which AI solutions are integrated, so that an organisation can check how each service performs on its own data and choose the one it needs.

- From the developer: expanding the monitoring of its AI systems, responding quickly to incidents and publishing error reports. Openness on the developer's part is very important in medical AI: a system error can not only waste the doctor's time but also harm the patient. Therefore, in our opinion, the developer should also publish open reports with examples of both correct and incorrect operation of the service. It is also important that medical experts are involved in the developer's day-to-day activities (we have described the roles of doctors in our company in a separate article).

- From the medical community: creating programmes for the optimal and safe use of AI systems. It is important for doctors to fully understand the benefits of AI and which features really work well for them. After all, these products are created for them! And, of course, it is important to make doctors aware that AI is an assistant, not a competitor.